I have been conducting synthetic and real user performance benchmarking tests for many years.

My test results relate directly to Digital Employee Experience (DEX), specifically in Desktop as a Service (DaaS) environments. But I'm not alone.

A growing number of manufacturers and service providers in the end user computing (EUC) and DaaS market claim to offer DEX products, components or cloud services.

The 2023 Gartner Market Guide for DEX Tools includes the following statement:

“Tools that measure and continually improve the digital employee experience are becoming more important as enablers of modern digital workplaces.”

This is an attractive market, but when the market analyst firm Gartner introduced the term DEX, it defined it rather broadly around employees, their experience and their use of technology.

Due to the ambiguity of the term, most vendors of DEX tools and products for DaaS environments have their own definition of DEX.

In addition, they tend to focus primarily on highly technical metrics of EUC backend components or network connections, which often say little or nothing about the quality of the real end user experience.

In contrast, my focus is primarily on the user, who typically has little interest in classic monitoring metrics such as CPU load, network bandwidth or IOPS when more subjective quality criteria are not met.

Drawing on my project experience in EUC Score performance benchmarking, I have created a list of 10 user-centric DEX quality criteria for DaaS environments (DEX4DaaS), which I would like to share here.

The list below is continually being improved, and I welcome feedback and suggestions for additions.

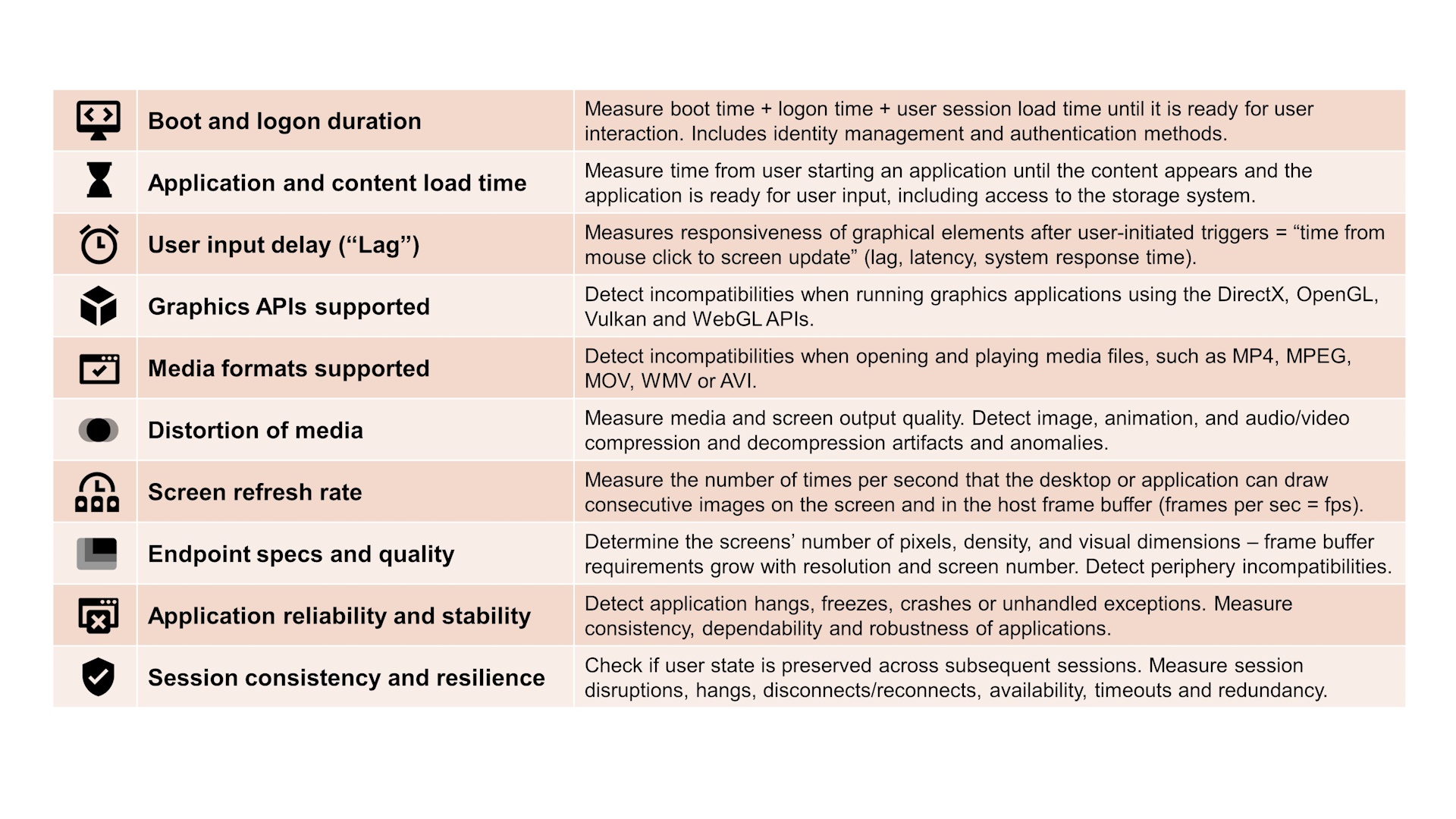

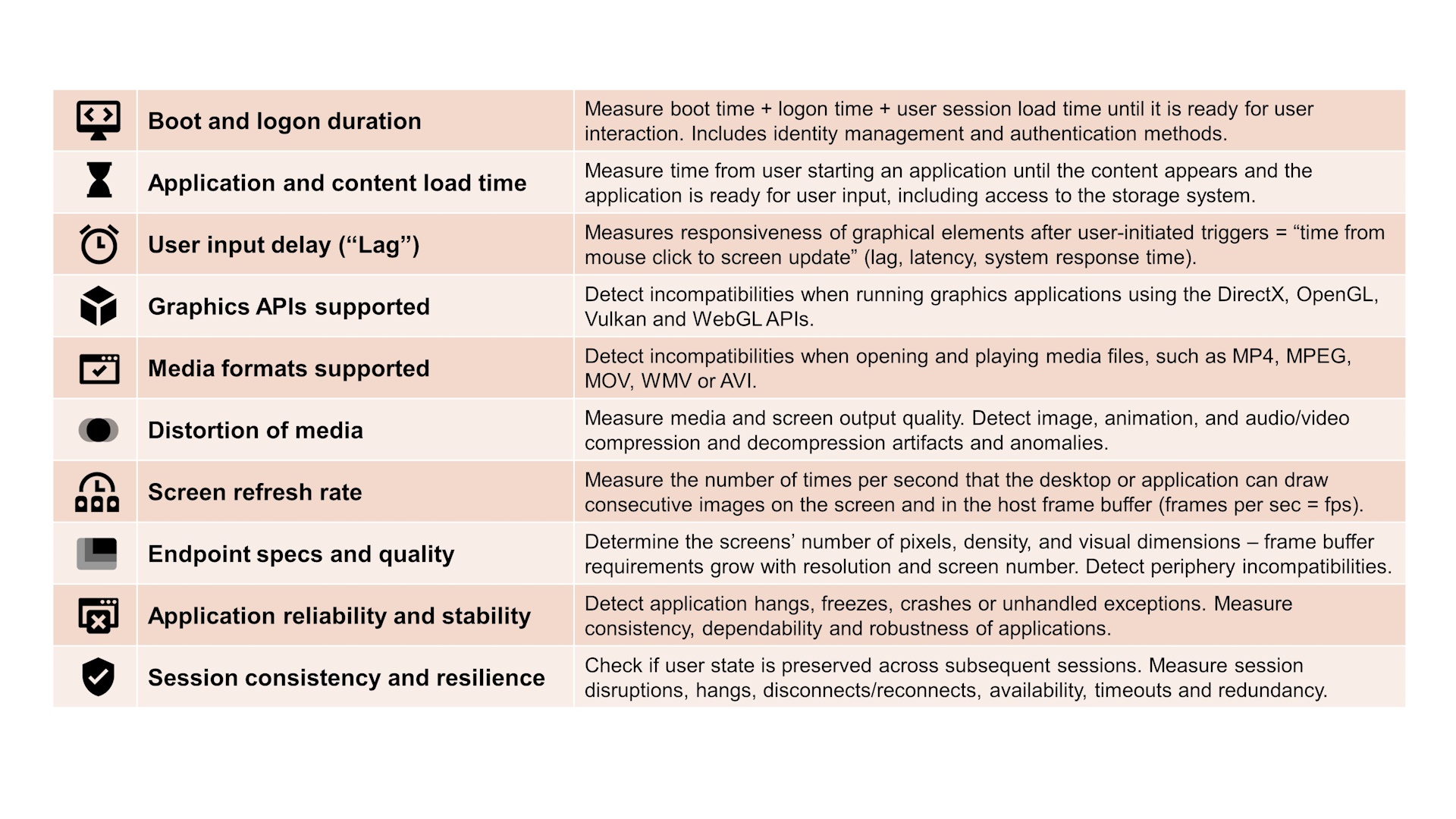

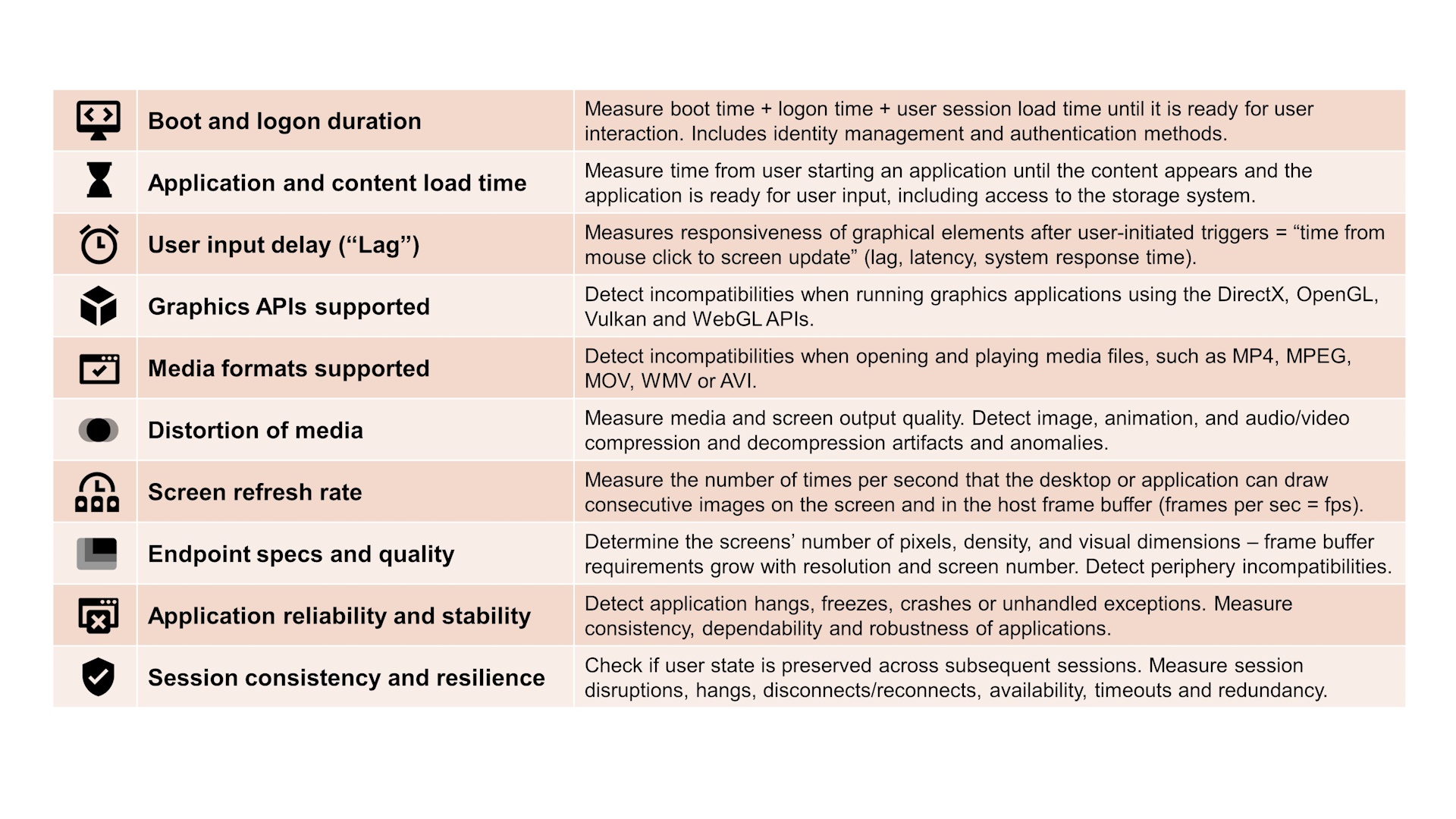

DEX Quality Criteria for DaaS Environments

- Boot and logon duration: Measure boot time + logon time + user session load time. This is the time from turning on the endpoint device to the moment when the user can start interacting with the desktop or the published application, including the time spent on identity management and authentication.

- Application and content load time: Measure the amount of time it takes for a user mode application to start, initialize fully and, if required, open data files from the storage system before the user interface is ready for user input.

- User input delay: Measure the amount of time that passes between user input (= user-initiated triggers) and the corresponding appearance of graphics primitives or user interface elements, also referred to as responsiveness, lag or latency. In a remote desktop connection, the network's round-trip time (RTT) may play a significant role in user input delay.

- Graphics APIs supported: Detect incompatibilities when running graphics applications using the DirectX, OpenGL, Vulkan and WebGL APIs.

- Media formats supported: Detect incompatibilities when opening and playing media files, such as MP4, MPEG, MOV, WMV or AVI.

- Distortion of output media: Measure media and screen output quality, identify out-of-sync media streams (= lack of cross-media synchronicity) and detect image, animation, and audio/video compression and decompression artifacts and anomalies, such as grains, color loss, blocking, tiling, pixelating, blurring, flickering, and noise.

- Screen refresh rate: Measure the number of times per second that the desktop or application is able to draw or refresh consecutive images on the screen of the endpoint device, expressed in frames per second (fps) or Hertz (Hz). In remote desktop scenarios, the frame rates on the sender side and on the receiver side both contribute to the overall perceived frame rate.

- Endpoint specs and quality: For monitors, determine the resolution (number of pixels and pixel density) as well as the visible diagonal size in inches or centimeters. Ensure that the physical endpoint device is reliable and that the input and output devices connected to it, such as screens, keyboard, mouse, USB devices or printers, work properly and deliver sufficient peripheral performance in the context of a remote desktop connection.

- Application reliability and stability: Detect application hangs, freezes, crashes or unhandled exceptions. Measure the consistency, dependability and robustness of applications (number of faults). Identify memory leaks and analyze behavior under sudden spikes in user load.

- Session consistency and resilience: Check whether the user state is preserved across subsequent sessions. Measure user session disruptions, hangs, and disconnects/reconnects. Address factors such as session availability, timeouts and redundancy.
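
Several of the quantitative criteria above come down to simple arithmetic on timestamps collected during a test run. The following Python sketch shows one possible way to turn raw measurements into three of them: boot and logon duration, user input delay and frame rate. It assumes the timestamps have already been captured by some benchmarking or monitoring tool and that each user input has already been paired with its corresponding screen update. The class and function names (`LogonTimings`, `input_delay_ms`, `frame_rate_fps`) and the sample values are hypothetical and for illustration only. If the screen update is observed on the endpoint device, the measured input delay also includes the network RTT of a remote desktop connection.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class LogonTimings:
    """Hypothetical phase durations (seconds) for one boot and logon sequence."""
    boot_s: float
    logon_s: float          # assumed to include identity management and authentication
    session_load_s: float

    def total_s(self) -> float:
        # Boot and logon duration = boot time + logon time + user session load time
        return self.boot_s + self.logon_s + self.session_load_s


def input_delay_ms(input_ts_ms: list[float], update_ts_ms: list[float]) -> list[float]:
    """Delay between each user input and the matching screen update, in ms.

    Assumes both lists are already paired one-to-one in the same order.
    """
    if len(input_ts_ms) != len(update_ts_ms):
        raise ValueError("each input needs exactly one matching screen update")
    return [update - inp for inp, update in zip(input_ts_ms, update_ts_ms)]


def frame_rate_fps(frame_ts_ms: list[float]) -> float:
    """Average frames per second from consecutive frame timestamps in ms."""
    if len(frame_ts_ms) < 2:
        raise ValueError("need at least two frame timestamps")
    duration_ms = frame_ts_ms[-1] - frame_ts_ms[0]
    if duration_ms <= 0:
        raise ValueError("frame timestamps must increase")
    # N timestamps span N - 1 frame intervals
    return (len(frame_ts_ms) - 1) * 1000 / duration_ms


if __name__ == "__main__":
    # Hypothetical sample values, not real benchmark results
    print(LogonTimings(boot_s=18.0, logon_s=9.5, session_load_s=6.5).total_s())  # 34.0
    delays = input_delay_ms([0, 1000, 2000, 3000], [45, 1060, 2050, 3120])
    print(delays, median(delays), max(delays))  # [45, 60, 50, 120] 55.0 120
    print(frame_rate_fps([0, 40, 80, 120, 160]))  # 25.0
```

Reporting the median alongside the maximum is one simple design choice. It shows both typical responsiveness and the worst observed lag, which an average alone would hide.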

Benny Tritsch

25 May 2024
